Beyond Single Agents: How LaMMA-P Solves the Coordination Puzzle in Multi-Robot Teams

Large Language Models (LLMs) have revolutionized robotics, granting machines an unprecedented ability to understand and act on complex human instructions. Their elegance lies in translating ambiguous natural language into concrete action plans. However, this powerful foundation has an Achilles' heel: coordinating a team of specialized, heterogeneous robots over long-horizon tasks. When multiple robots with different skills must collaborate on a complex sequence of actions, standard LLM-based approaches often falter, struggling with efficient task allocation and dependency management.

This article explores the strengths and future potential of the Language Model-Driven Multi-Agent PDDL Planner (LaMMA-P), a novel framework designed by researchers at the Trustworthy Autonomous Systems Laboratory (TASL) at the University of California, Riverside, to overcome this weakness. By blending the reasoning power of LLMs with the structured rigor of classical planning, LaMMA-P forges a new path toward truly collaborative, intelligent AI systems that can tackle complex, real-world challenges.

A Refresher on the Basics: LLMs as Robot Brains

Before diving into LaMMA-P's architecture, it's essential to understand the standard approach it improves upon. Typically, LLMs are used in robotics as high-level "translators." The process generally looks like this:

Human Command: A user provides a high-level instruction in natural language, like, "Prepare breakfast and clean the kitchen."

LLM Interpretation: The LLM parses this command, breaking it down into a sequence of probable steps (e.g., get eggs, find a pan, cook, throw away trash).

Code Generation: The LLM then translates these steps into executable code or commands that the robot's control system can understand.

Execution: The robot executes the commands sequentially.

This approach works well for a single robot performing relatively straightforward tasks. The problem arises when the complexity scales up.

The Flaw in the Foundation: The Coordination Blindspot

The primary limitation of relying solely on LLMs for multi-robot planning is their difficulty with what the paper calls cooperative heterogeneous robot teams. This challenge, which we can call the "Coordination Blindspot," manifests in several ways:

Inefficient Task Allocation: How do you decide which robot does what, especially when they have different skills? Robot A can pick things up, while Robot B can only flip switches. An LLM might struggle to assign sub-tasks optimally.

Dependency Management: Some tasks must be done sequentially (you can't close the drawer before putting the watch inside), while others can be done in parallel to save time. Standard LLM planners are not inherently optimized to manage these complex dependencies efficiently.

Lack of Verifiability: An LLM generates a plan based on probabilistic reasoning. It doesn't guarantee the plan is optimal or even valid within the physical constraints of the environment. This can lead to errors and failed tasks.
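To make these failure modes concrete, here is a minimal Python sketch that checks a naive plan for the first two problems: a sub-task assigned to a robot without the required skill, and a step scheduled before its prerequisite. The `Step` class, the robot names, and every skill name other than `PickupObject` are assumptions made for illustration; this is not code from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    skill: str         # skill the step requires
    robot: str         # robot the naive plan assigned it to
    after: tuple = ()  # names of steps that must finish first


# Hypothetical team: robot name -> skills it has.
ROBOT_SKILLS = {
    "robot_a": {"PickupObject", "PutObject"},
    "robot_b": {"ToggleSwitch"},
}


def find_plan_errors(plan):
    """Return human-readable errors for skill mismatches and ordering violations."""
    errors = []
    done = set()
    for step in plan:
        if step.skill not in ROBOT_SKILLS.get(step.robot, set()):
            errors.append(f"{step.robot} lacks {step.skill} for {step.name}")
        for dep in step.after:
            if dep not in done:
                errors.append(f"{step.name} runs before {dep}")
        done.add(step.name)
    return errors


# A plausible-looking but invalid plan: the drawer is closed first,
# and by a robot that can only flip switches.
naive_plan = [
    Step("close_drawer", "CloseObject", "robot_b", after=("put_watch_in_drawer",)),
    Step("put_watch_in_drawer", "PutObject", "robot_a"),
]

for error in find_plan_errors(naive_plan):
    print(error)
```

A purely generative planner has no built-in step like `find_plan_errors`; the point of the sketch is that such checks are mechanical once the plan is expressed in a structured form.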

The Solution: LaMMA-P's Hybrid Architecture

LaMMA-P addresses the coordination blindspot by integrating LLMs with a classical, symbolic planner based on the Planning Domain Definition Language (PDDL). PDDL is a formal language used to represent planning problems in a structured way that computers can solve logically and efficiently.

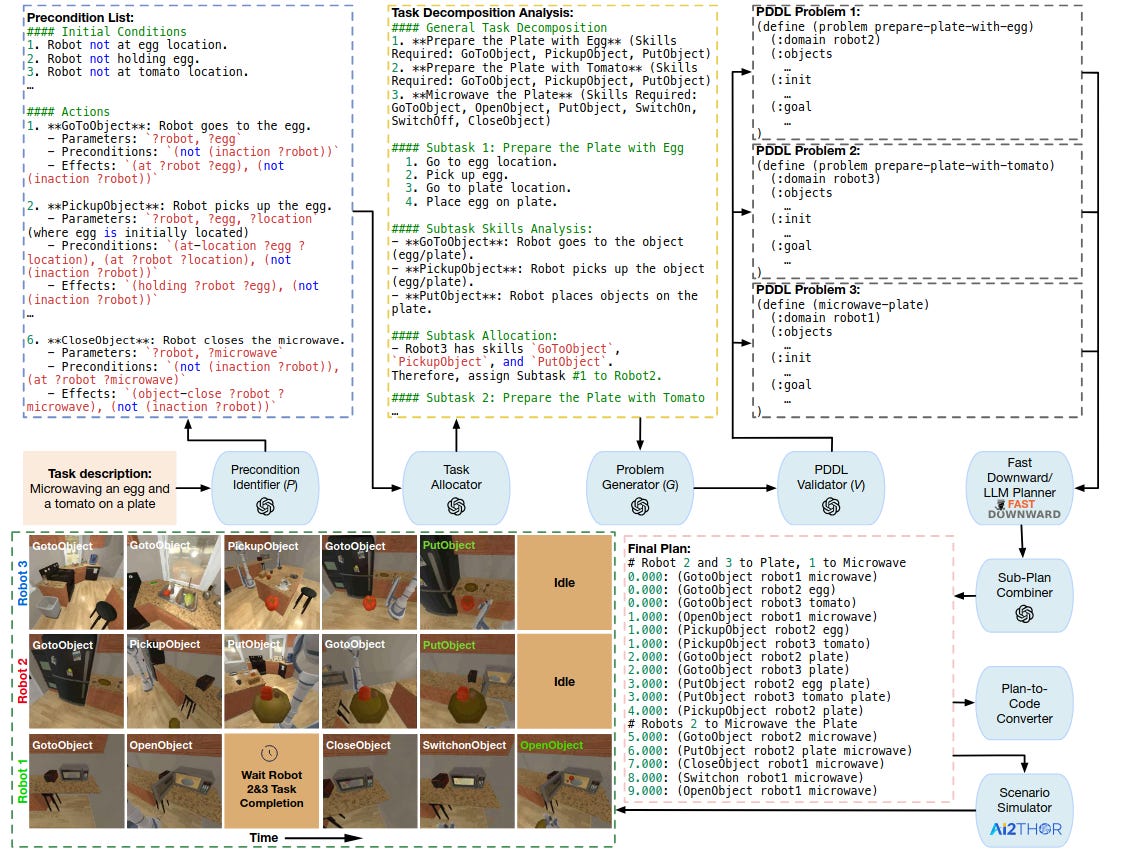

LaMMA-P’s modular architecture masterfully divides the labor between the LLM and the PDDL planner:

The LLM as the "Master Strategist": The LLM handles the high-level reasoning. Given a complex command, it excels at:

Task Decomposition: Breaking down the main goal into logical sub-tasks (e.g., "Prepare the Plate with Egg," "Microwave the Plate").

Skill Analysis: Identifying the skills required for each sub-task (e.g., GoToObject, PickupObject).

Task Allocation: Assigning each sub-task to the most suitable robot based on its predefined skill set.

Source: https://doi.org/10.48550/arXiv.2409.20560
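The allocation step can be pictured as a set-cover check between the skills a sub-task needs and the skills each robot has. In LaMMA-P this decision is made by the LLM; the Python sketch below is only a deterministic stand-in to show the shape of the input and output. The robot names, the tie-breaking rule (prefer the robot with the fewest skills), and all skill names except `GoToObject` and `PickupObject` are assumptions.

```python
def allocate(subtasks, robots):
    """Map each sub-task to a robot whose skill set covers it (None if nobody can).

    subtasks: sub-task name -> set of required skills
    robots:   robot name -> set of available skills
    """
    allocation = {}
    for task, needed in subtasks.items():
        capable = [name for name, skills in robots.items() if needed <= skills]
        # Assumed heuristic: prefer the most specialised capable robot,
        # keeping more versatile robots free for harder sub-tasks.
        allocation[task] = min(capable, key=lambda n: len(robots[n])) if capable else None
    return allocation


robots = {
    "robot1": {"GoToObject", "PickupObject", "PutObject"},
    "robot2": {"GoToObject", "PickupObject", "PutObject",
               "OpenObject", "CloseObject", "SwitchOn"},
}
subtasks = {
    "Prepare the Plate with Egg": {"GoToObject", "PickupObject", "PutObject"},
    "Microwave the Plate": {"GoToObject", "OpenObject", "CloseObject", "SwitchOn"},
}

allocation = allocate(subtasks, robots)
print(allocation)
```

With these inputs the first sub-task goes to `robot1` and the second to `robot2`, the only robot that can operate the microwave.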

PDDL as the "Logistics Expert": Once the LLM has allocated sub-tasks, the PDDL components take over to handle the low-level, verifiable planning:

Problem Generation: It translates each sub-task into a formal PDDL problem file, defining the initial state, objects, and goal for that specific task.

Validation: A PDDL Validator ensures the generated problem files are correct and executable, preventing errors before they happen.

Planning: The Fast Downward planner, a highly efficient heuristic search planner, generates a sequence of actions for each robot that solves its assigned PDDL problem; depending on the search configuration, that sequence can be optimal.

Plan Combination: Finally, an LLM-powered Sub-Plan Combiner intelligently merges the individual robot plans, optimizing for parallel execution while respecting dependencies to create a single, synchronized final plan.
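To give a feel for what the Problem Generation step produces, the following Python sketch assembles a small PDDL problem file from plain data and runs a lightweight sanity check on it. The domain name `kitchen-robot`, the object names, and the `sanity_check` helper are assumptions for illustration; the check is a toy stand-in, not the PDDL Validator used in the paper.

```python
def fact(parts):
    """Render a tuple such as ("at-location", "Egg", "Location1") as a PDDL atom."""
    return "(" + " ".join(parts) + ")"


def make_problem(name, domain, objects, init, goal):
    """Build the text of an untyped PDDL problem file."""
    return "\n".join([
        f"(define (problem {name})",
        f"  (:domain {domain})",
        f"  (:objects {' '.join(objects)})",
        f"  (:init {' '.join(fact(f) for f in init)})",
        f"  (:goal (and {' '.join(fact(f) for f in goal)}))",
        ")",
    ])


def sanity_check(text, objects, init, goal):
    """Toy checks only: balanced parentheses and no undeclared objects."""
    problems = []
    if text.count("(") != text.count(")"):
        problems.append("unbalanced parentheses")
    for f in list(init) + list(goal):
        for arg in f[1:]:
            if arg not in objects:
                problems.append(f"undeclared object: {arg}")
    return problems


objects = ["Egg", "Plate", "Location1", "Location2"]
init = [("at-location", "Egg", "Location1"), ("at-location", "Plate", "Location2")]
goal = [("at-location", "Egg", "Location2")]

problem_text = make_problem("prepare-plate", "kitchen-robot", objects, init, goal)
print(problem_text)
print(sanity_check(problem_text, objects, init, goal))
```

A file like this, paired with a matching domain file that defines the `at-location` predicate and the robot's actions, is the kind of input a classical planner such as Fast Downward consumes.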

This hybrid system uses each component for what it does best: the LLM for creative reasoning and language understanding, and the PDDL planner for logical, efficient, and verifiable action sequencing.

The Proven Advantages

The paper's experiments, conducted on the new and challenging MAT-THOR benchmark, demonstrate LaMMA-P's clear superiority over previous methods.

Superior Performance and Success Rate: LaMMA-P achieved a 105% higher success rate than the state-of-the-art baseline, SMART-LLM. This drastic improvement comes from its ability to correctly decompose tasks and generate valid, executable plans, especially as task complexity increases.

Enhanced Efficiency and Coordination: The framework also proved to be 36% more efficient. By identifying parallelizable tasks and using an optimal planner, LaMMA-P ensures robots work together seamlessly, minimizing idle time and completing tasks faster. The system effectively manages dependencies, allowing one robot to wait for another to complete a prerequisite action, as seen in the drawer-opening task.

Robust Generalization: The system shows a stronger ability to handle vague commands. While other models failed completely on these tasks, LaMMA-P was still able to infer the user's intent and generate a successful plan, highlighting the robust reasoning facilitated by its structured approach.

Modular Strength: The ablation study confirms that every module contributes significantly to the overall performance. Adding predefined domain knowledge (D), the Problem Generator (G), the Validator (V), and the Precondition Identifier (P) progressively improved success rates and efficiency, proving the thoughtful design of the architecture.
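The dependency handling described under Enhanced Efficiency and Coordination can be sketched as a simple scheduler that merges per-robot sub-plans into parallel time steps and makes a robot wait until its prerequisites are done. LaMMA-P uses an LLM-powered combiner for this; the deterministic Python version below is only an illustration, and the action names and the `waits_for` mapping are assumptions.

```python
def combine(sub_plans, waits_for):
    """Merge sequential sub-plans into a list of parallel time steps.

    sub_plans: robot -> ordered list of actions
    waits_for: action -> set of actions that must have finished in an earlier step
    """
    cursor = {robot: 0 for robot in sub_plans}
    finished = set()
    schedule = []
    while any(cursor[r] < len(plan) for r, plan in sub_plans.items()):
        step = {}
        for robot, plan in sub_plans.items():
            if cursor[robot] < len(plan):
                action = plan[cursor[robot]]
                if waits_for.get(action, set()) <= finished:
                    step[robot] = action  # otherwise the robot idles this step
        if not step:
            raise ValueError("deadlock: no robot can act")
        for robot in step:
            cursor[robot] += 1
        finished.update(step.values())
        schedule.append(step)
    return schedule


sub_plans = {
    "robot1": ["open_drawer", "close_drawer"],
    "robot2": ["pick_watch", "put_watch_in_drawer"],
}
waits_for = {
    "put_watch_in_drawer": {"open_drawer"},
    "close_drawer": {"put_watch_in_drawer"},
}

for t, step in enumerate(combine(sub_plans, waits_for), start=1):
    print(t, step)
```

In this example the four actions fit into three time steps: opening the drawer and picking up the watch happen in parallel, and `robot1` idles for one step before closing the drawer.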

The Road Ahead: From Static Labs to Dynamic Worlds

While LaMMA-P marks a significant advancement, its design is based on a foundational assumption common in robotics research: the environment is fully observable and static, and the composition of the robot team is pre-defined. This means the system assumes it has perfect knowledge of the world, that nothing will change unexpectedly, and that it knows exactly which robots are available and what they can do from the outset. In the real world, this is rarely the case. The paper's authors and the broader research field identify these as key limitations, pointing toward critical areas for future work that would bridge the gap between the lab and real-world application.

1. Integrating Advanced Perception with Vision-Language Models (VLMs): The current LaMMA-P framework operates like a chess engine—it knows the exact position of every piece on the board at all times. However, a real-world environment is more like a bustling city street, where information is incomplete and constantly changing.

The Current Limitation: The system relies on a predefined, symbolic representation of the world (e.g., (at-location Egg Location1)). It doesn't "see" the egg; it's simply told where the egg is. This works perfectly in a simulated, controlled setting like the AI2-THOR environment, but it cannot function in an unstructured space where object locations are not known in advance.

The Proposed Solution: Future work aims to incorporate vision-language models (VLMs) to give the system robust perceptual capabilities. VLMs are a class of AI models that can interpret and reason about both visual data (images, video) and text.
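One way to picture the bridge between perception and symbolic planning is a small adapter that turns perception outputs into facts such as (at-location Egg Location1). The Python sketch below assumes a hypothetical `Detection` record and a confidence threshold; it does not call any real VLM, and nothing here is taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """Hypothetical output of a perception model for one object."""
    label: str
    location: str
    confidence: float


def detections_to_facts(detections, min_confidence=0.5):
    """Convert sufficiently confident detections into PDDL-style initial-state facts."""
    facts = {
        f"(at-location {d.label} {d.location})"
        for d in detections
        if d.confidence >= min_confidence
    }
    return sorted(facts)


detections = [
    Detection("Egg", "Location1", 0.93),
    Detection("Plate", "Location2", 0.88),
    Detection("Tomato", "Location3", 0.21),  # too uncertain to assert as a fact
]

print(detections_to_facts(detections))
```

The low-confidence tomato is dropped rather than asserted, which hints at the harder open question: a symbolic planner expects facts to be true, while perception only ever provides estimates.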

2. Developing Adaptive Re-planning for Dynamic Scenarios: The second major area for improvement addresses the "static" part of the core assumption. LaMMA-P generates a complete, optimized plan from start to finish and then executes it. This is highly efficient but brittle; if anything goes wrong, the entire plan can fail.

The Current Limitation: The framework lacks a mechanism to handle unexpected events or execution failures. If a robot tries to pick up a tomato and fails, or if a human walks by and closes a door the plan required to be open, the system cannot adjust. It will continue trying to execute an invalid plan, leading to task failure.
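An adaptive system would wrap execution in a monitor-and-replan loop. The Python sketch below shows the idea with a toy world in which a door the planner believed to be open is actually closed. The `plan` and `execute` functions, the action names, and the `max_replans` limit are all assumptions for illustration; LaMMA-P as described has no such loop.

```python
def plan(world, done):
    """Toy planner: remaining actions, plus a door-opening step if the door is closed."""
    steps = []
    for action in ["pick_tomato", "go_to_fridge", "put_tomato_in_fridge"]:
        if action in done:
            continue
        if action == "go_to_fridge" and not world["door_open"]:
            steps.append("open_door")
        steps.append(action)
    return steps


def execute(action, world):
    """Toy executor: returns True on success, False on failure."""
    if action == "open_door":
        world["door_open"] = True
        return True
    if action == "go_to_fridge":
        return world["door_open"]
    return True


def run(world, believed_world, max_replans=3):
    """Execute a plan, replanning from the observed world after each failure."""
    done, replans = [], 0
    queue = plan(believed_world, done)
    while queue:
        action = queue.pop(0)
        if execute(action, world):
            done.append(action)
            continue
        if replans == max_replans:
            raise RuntimeError(f"giving up after failing at {action}")
        replans += 1
        queue = plan(world, done)  # re-observe the real world and replan
    return done, replans


actual = {"door_open": False}   # someone closed the door
believed = {"door_open": True}  # what the planner assumed up front
print(run(actual, believed))
```

Here the first attempt at `go_to_fridge` fails, the loop replans once against the observed state, and the repaired plan inserts `open_door` before continuing.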

3. Dynamic Team and Skill Discovery: A major leap would be to remove the dependency on pre-defined knowledge about the robot team. Currently, the system must be told exactly how many robots are participating and what each robot's specific skills are before planning can begin. A truly autonomous system should be able to figure this out on its own.

The Current Limitation: The framework is configured for a fixed team with known capabilities. This setup is rigid and not scalable. It cannot accommodate a robot running out of battery or a new robot joining the team mid-task. Every robot's skills must be manually encoded in a PDDL domain file, which is a significant barrier to using new or unfamiliar hardware.
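A first step away from a fixed team could be a registry that robots join and leave at runtime, advertising their own skills. The Python sketch below is a hypothetical illustration of that data structure only; the `TeamRegistry` class and the robot and skill names are assumptions, and real skill discovery would also need the corresponding PDDL action definitions.

```python
class TeamRegistry:
    """Tracks which robots are currently available and what they can do."""

    def __init__(self):
        self._skills = {}

    def join(self, robot, skills):
        """Register a robot (or update its skills) when it announces itself."""
        self._skills[robot] = frozenset(skills)

    def leave(self, robot):
        """Remove a robot, e.g. when its battery runs out."""
        self._skills.pop(robot, None)

    def capable(self, skill):
        """Return the names of available robots that have the given skill."""
        return sorted(r for r, s in self._skills.items() if skill in s)


team = TeamRegistry()
team.join("robot1", ["GoToObject", "PickupObject"])
team.join("robot2", ["GoToObject"])
print(team.capable("PickupObject"))

team.leave("robot1")                                 # robot1 drops out mid-task
team.join("robot3", ["GoToObject", "PickupObject"])  # a new robot joins
print(team.capable("PickupObject"))
```

A planner that queried such a registry before each allocation, rather than reading a fixed configuration once, could at least react to robots dropping out or joining.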

4. Skill Learning and Domain Adaptation: The most advanced future direction would be to empower the robots themselves to expand their capabilities. Currently, the robot skills are static and based on pre-defined PDDL domains. A robot can only perform actions that have been explicitly programmed for it.

The Current Limitation: The framework's ability to solve problems is fundamentally capped by its initial, hard-coded library of skills. It cannot solve a novel task that requires a new type of action it hasn't been taught (e.g., "whisk an egg" or "fold a towel"). This limits the system's long-term autonomy and adaptability.

Conclusion

LaMMA-P represents a significant leap forward in multi-agent robotics. By moving beyond the limitations of pure LLM-based planning, it demonstrates that the future of intelligent robotics lies in hybrid systems that combine the strengths of large-scale neural reasoning with the precision of classical, symbolic AI. Its architecture provides a robust, efficient, and generalizable solution to the complex puzzle of multi-robot coordination.

While challenges remain in adapting this framework to the messiness of the real world, LaMMA-P lays a powerful foundation. It charts a clear course toward a future where teams of heterogeneous robots can collaborate autonomously to solve long-horizon tasks, unlocking new possibilities in everything from warehouse automation to household assistance.

Further Reading and References

Title: PDDL - the planning domain definition language

Authors: M. Ghallab, A. Howe, C. Knoblock, et al.

Publication: Technical Report, 1998

Annotation: This is the foundational paper that introduced PDDL. Understanding it provides crucial context for the "classical planning" half of the LaMMA-P framework and why it brings structure and verifiability to AI planning.

Title: The fast downward planning system

Authors: M. Helmert

Publication: Journal of Artificial Intelligence Research, 2006

Annotation: This paper details the Fast Downward planner used in LaMMA-P. It explains the heuristic search mechanisms that allow the system to find efficient and optimal action sequences for the sub-tasks defined by the LLM.

Title: Smart-llm: Smart multi-agent robot task planning using large language models

Authors: S. S. Kannan, V. L. Venkatesh, and B.-C. Min

Publication: The 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

Annotation: This paper is presented as the primary state-of-the-art baseline against which LaMMA-P is compared. Reading it helps clarify the specific limitations of prior LLM-only approaches that LaMMA-P was designed to solve.